In incident response, time is everything. The faster you pinpoint the root cause, the quicker you can restore service and minimize impact. With the rise of AI-driven observability, many teams are turning to GenAI to help analyze logs, summarize alerts, and provide faster insights. But there’s one problem: GenAI alone isn’t enough.

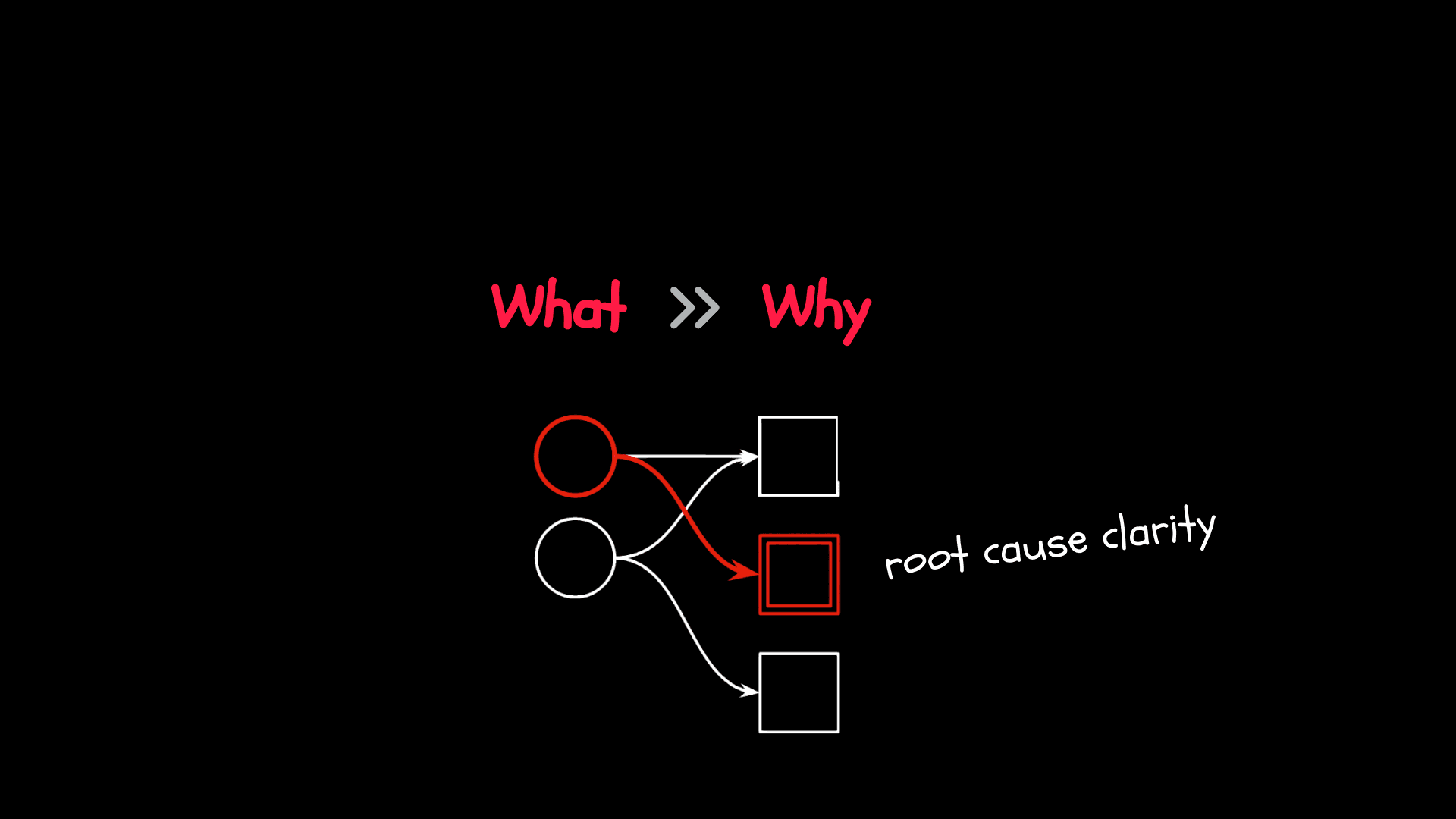

GenAI is good at explaining what happened, but on its own it can’t determine why an incident occurred. That’s where Causal AI comes in.

GenAI: Great at Summarization, Not Root Cause Analysis

GenAI models excel at making large amounts of data more digestible. They can:

- Generate human-readable summaries of incidents.

- Explain system behavior based on observability data.

- Assist in natural language troubleshooting by providing recommendations based on historical data.

- Process unstructured data into an explainable timeline of events.

For example, if your database latency spikes, GenAI can analyze logs and say:

Database response time increased by 200ms, coinciding with a 30% increase in incoming requests.

This is useful. But it doesn’t tell you whether the traffic spike caused the latency increase or was just another symptom of a different underlying problem.

Knowledge Graph vs. Causal Graph: Why Both Matter

At NOFire AI, we use a multi-layered approach that combines Knowledge Graphs, Causal AI, and GenAI to ensure accurate, fast root cause analysis.

Knowledge Graphs: Understanding System Context

Our Knowledge Graph integrates telemetry from OpenTelemetry and eBPF to create a real-time, structured view of system behavior. It maps:

- Upstream/downstream dependencies in microservices.

- CI/CD events to link recent deployments with incidents.

- Historical post-mortems and past incidents to surface recurring failure patterns.

- Infrastructure relationships, revealing how nodes, pods, and services interact.
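As a rough illustration of the kind of context such a graph holds, the sketch below stores service-to-service calls and CI/CD deployment times. It answers a typical triage question: “Was anything this service depends on deployed shortly before the incident?” The class, its methods, and the service names are hypothetical. They are not NOFire AI’s data model, and a real system would populate this from telemetry rather than by hand.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class KnowledgeGraph:
    """Toy dependency map; structure and names are illustrative only."""
    # service -> services it calls (its downstream dependencies)
    calls: dict = field(default_factory=dict)
    # service -> deployment timestamps reported by CI/CD
    deployments: dict = field(default_factory=dict)

    def add_call(self, caller: str, callee: str) -> None:
        self.calls.setdefault(caller, set()).add(callee)
        self.calls.setdefault(callee, set())

    def add_deployment(self, service: str, at: datetime) -> None:
        self.deployments.setdefault(service, []).append(at)

    def dependencies(self, service: str) -> set:
        """Every service that `service` depends on, directly or transitively."""
        seen, stack = set(), [service]
        while stack:
            for dep in self.calls.get(stack.pop(), ()):
                if dep not in seen:
                    seen.add(dep)
                    stack.append(dep)
        return seen

    def recent_deployments(self, service: str, incident_at: datetime, window: timedelta):
        """Deployments to `service` or its dependencies within `window` before the incident."""
        hits = [
            (svc, at)
            for svc in {service} | self.dependencies(service)
            for at in self.deployments.get(svc, [])
            if incident_at - window <= at <= incident_at
        ]
        return sorted(hits, key=lambda hit: hit[1])


# Usage with hypothetical services
kg = KnowledgeGraph()
kg.add_call("checkout", "payments")
kg.add_call("payments", "postgres")
kg.add_call("checkout", "inventory")

incident_at = datetime(2024, 5, 1, 12, 0)
kg.add_deployment("payments", incident_at - timedelta(minutes=20))
kg.add_deployment("inventory", incident_at - timedelta(days=2))  # too old to be flagged

suspects = kg.recent_deployments("checkout", incident_at, window=timedelta(hours=1))
print(suspects)
```

Note that this only narrows the search: it shows that `payments` changed recently and sits on the path of `checkout`, not that the deployment caused the incident.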

This provides valuable context and correlation, but correlation is not causation.

Causal Graphs: Identifying Cause-Effect Relationships

Causal AI takes it a step further by:

- Determining the real cause of failures, not just symptoms.

- Tracing how one failure propagates across the system.

- Providing actionable, evidence-backed resolutions.

Instead of simply correlating two events, such as “high CPU” and “slow API responses”, Causal AI builds a directed causal graph that shows whether and how one leads to the other. This keeps the focus on fixing the real problem rather than chasing misleading signals.
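Here is a minimal sketch of how a directed causal graph can be used once it exists. It assumes the cause-to-effect edges are already known, which is the hard part and is not shown. Given the set of signals currently behaving anomalously, a common simplified heuristic treats an anomalous node with no anomalous direct causes as a root-cause candidate. A breadth-first search then recovers the propagation path from that candidate to the symptom. The signal names and both functions are hypothetical, and this is an illustration of the idea, not NOFire AI’s algorithm.

```python
from collections import deque


def root_cause_candidates(causal_edges, anomalous):
    """Anomalous nodes none of whose direct causes are themselves anomalous."""
    parents = {}
    for cause, effect in causal_edges:
        parents.setdefault(effect, set()).add(cause)
    return {node for node in anomalous if not (parents.get(node, set()) & anomalous)}


def propagation_path(causal_edges, root, symptom):
    """Shortest cause -> effect path from root to symptom (BFS), or None."""
    children = {}
    for cause, effect in causal_edges:
        children.setdefault(cause, []).append(effect)
    prev = {root: None}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if node == symptom:
            path = []
            while node is not None:  # walk back to the root
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in children.get(node, []):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


# Hypothetical causal graph: edges point from cause to effect
edges = [
    ("batch_job.cpu_spike", "node.cpu_high"),
    ("node.cpu_high", "api.slow_responses"),
    ("traffic.requests", "api.slow_responses"),
    ("api.slow_responses", "frontend.error_rate"),
]
anomalous = {"batch_job.cpu_spike", "node.cpu_high",
             "api.slow_responses", "frontend.error_rate"}  # traffic looks normal

for root in root_cause_candidates(edges, anomalous):
    print(root, "->", propagation_path(edges, root, "frontend.error_rate"))
```

Because `traffic.requests` is not anomalous, it is ruled out even though it is a direct cause of slow API responses. The high CPU and slow responses are classified as intermediate effects rather than the trigger.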

GenAI: Contextual Insights

While Causal AI finds the root cause, GenAI provides structured explanations by:

- Creating a timeline of events that captures how the issue unfolded.

- Summarizing findings in natural language, making them easily digestible for engineers and stakeholders.

- Extracting relevant logs and traces from massive data streams to highlight the key moments of an incident.
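The summarization itself is done by a language model, but the step before it is deterministic and worth getting right. That step pulls the relevant events out of the stream and orders them into a timeline. The sketch below shows that extraction step only, under simple assumptions. It keeps events in a window around the incident, drops low-severity noise but always keeps deployments, and renders the result as plain text that could be passed to whichever LLM you use. The `Event` type, the severity scale, the prompt wording, and the sample events are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

SEVERITY = {"DEBUG": 10, "INFO": 20, "WARN": 30, "ERROR": 40}


@dataclass(frozen=True)
class Event:
    ts: datetime
    source: str    # e.g. "log", "trace", "alert", "deploy"
    severity: str  # one of the SEVERITY keys
    message: str


def build_timeline(events, incident_at, before, after, min_severity="WARN"):
    """Keep events near the incident that are severe enough (deploys always kept), oldest first."""
    start, end = incident_at - before, incident_at + after
    threshold = SEVERITY[min_severity]
    key_events = [
        e for e in events
        if start <= e.ts <= end
        and (e.source == "deploy" or SEVERITY[e.severity] >= threshold)
    ]
    return sorted(key_events, key=lambda e: e.ts)


def timeline_prompt(timeline):
    """Render the timeline as plain text for an LLM to summarize."""
    lines = [f"{e.ts:%H:%M:%S} [{e.source}/{e.severity}] {e.message}" for e in timeline]
    return "Summarize this incident timeline for an on-call engineer:\n" + "\n".join(lines)


# Usage with hypothetical events
t0 = datetime(2024, 5, 1, 12, 0)
events = [
    Event(t0, "alert", "ERROR", "checkout p99 latency above 500ms"),
    Event(t0 - timedelta(hours=3), "log", "ERROR", "unrelated disk warning on batch host"),
    Event(t0 - timedelta(minutes=2), "log", "WARN", "payments: DB connection pool exhausted"),
    Event(t0 - timedelta(minutes=1), "log", "DEBUG", "cache miss for cart 123"),
    Event(t0 - timedelta(minutes=20), "deploy", "INFO", "payments rolled out a new version"),
]
timeline = build_timeline(events, t0, before=timedelta(hours=1), after=timedelta(minutes=10))
print(timeline_prompt(timeline))
```

Doing the filtering and ordering in code keeps the model’s input small and chronologically correct, so the generated summary is less likely to misorder or invent events.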

Together, these layers form an intelligent system that detects, explains, and recommends fixes without requiring engineers to sift through dashboards manually.

How GenAI + Causal AI Work Together

The real power comes from combining GenAI and Causal AI to create an automated, intelligent incident response system. Here’s how:

Knowledge Graphs organize system data:

- Maps dependencies, past incidents, and service interactions.

- Correlates data across observability sources.

Causal AI finds the real root cause:

- Builds a causal graph on top of the dependency map.

- Identifies the true trigger of an incident.

- Reduces mean time to resolution (MTTR) by eliminating guesswork.

GenAI makes observability data accessible:

- Summarizes incidents and logs.

- Generates explainable insights.

- Creates structured, readable reports with recommended fixes.

The Future of Incident Response Is Causal AI-Powered

Observability is evolving. Dashboards and logs alone are no longer enough. GenAI helps make sense of data, but without Causal AI, teams are still left playing detective.

With NOFire AI, we bring Causal AI + GenAI together, so teams don’t just see what’s happening; they understand why it’s happening and how to fix it faster.